How to Use LLM Vision to Analyze Camera Images and Video in Home Assistant

How to set up and use LLM Vision in Home Assistant. It can analyze images, videos, live camera feeds, and Frigate events to provide useful (and hilarious) information about your smart home.

Introduction

I’ve already made videos on this channel about how you can use Generative AI in your smart home with Home Assistant. However, in my prior testing, Gen AI was unreliable in carrying out my smart home commands, and in some cases it even freaked out my family. But this time it’s different. I’ve got something that I’m now using every single day, and my wife and I both love it.

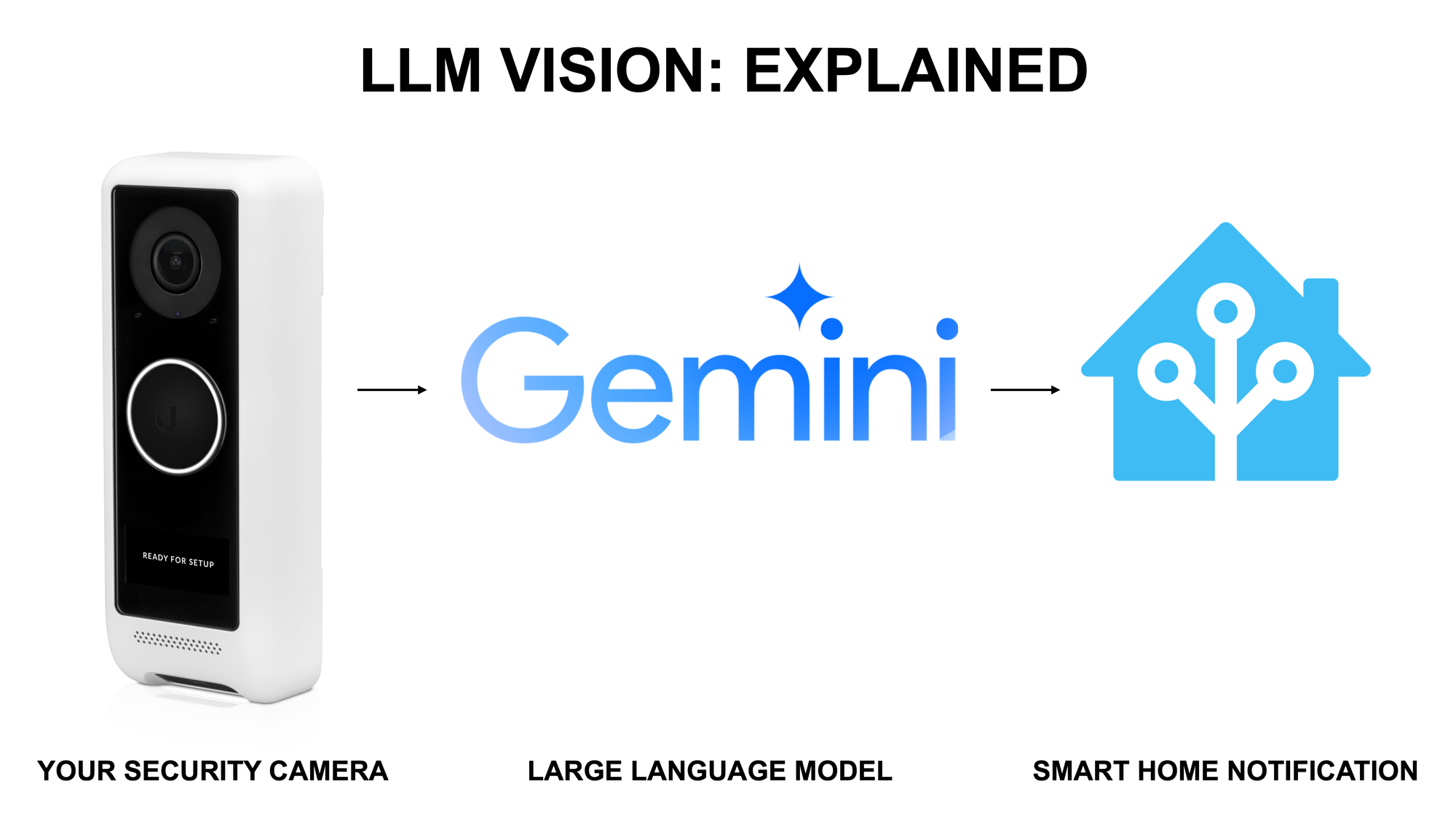

I’m going to show you how to share camera footage — like from a video doorbell — with a large language model, or LLM. The LLM will describe what is happening in a camera image or stream, and then send you a message with that description. There are so many exciting (and fun) things you can do with this, and I’m going to show you how to make it happen.

Main Points

LLM Vision

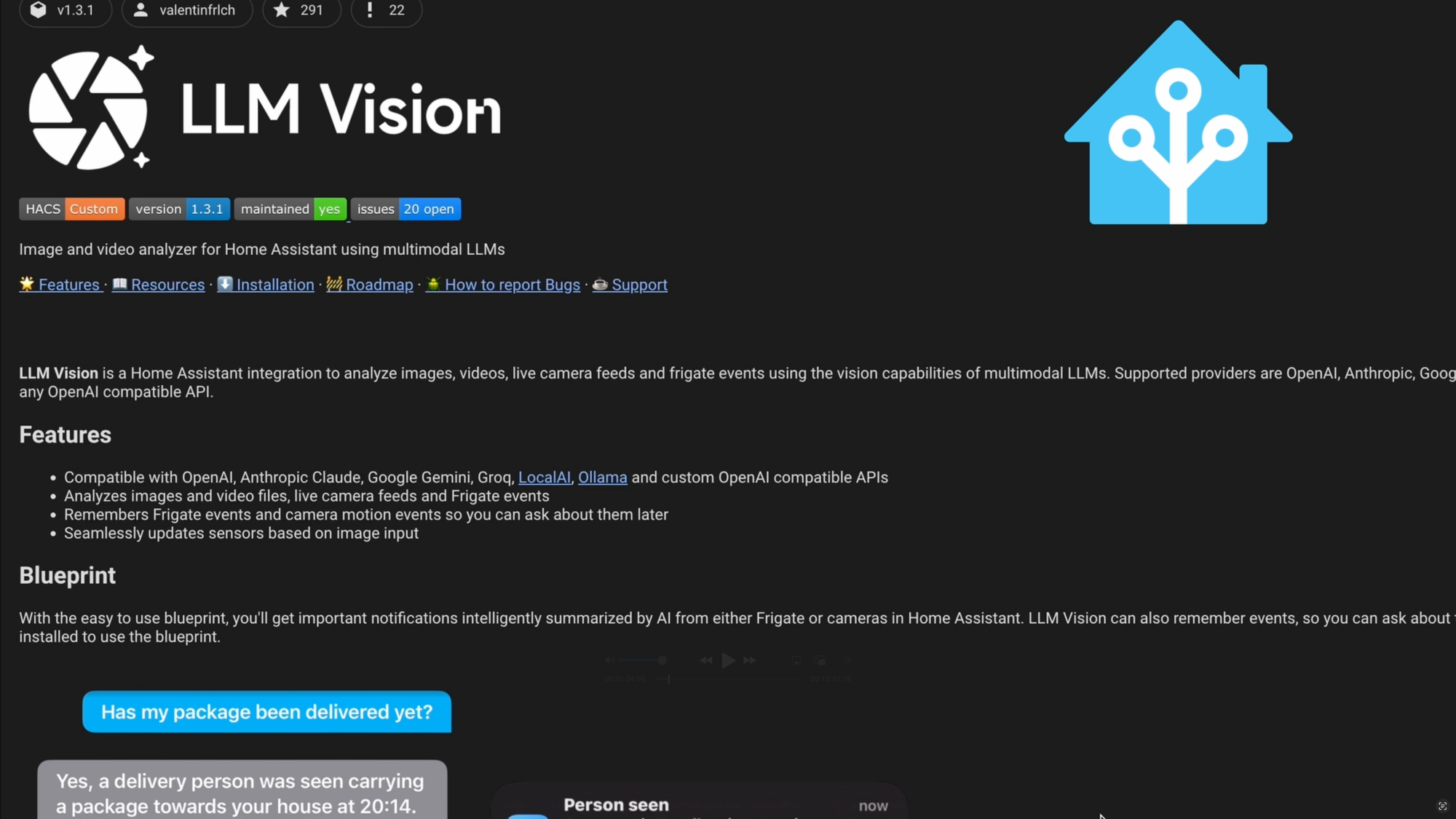

Alright, let’s take a look at how to set this up, and how I’m using it in my smart home. This whole thing is made possible by a HACS integration for Home Assistant called LLM Vision.

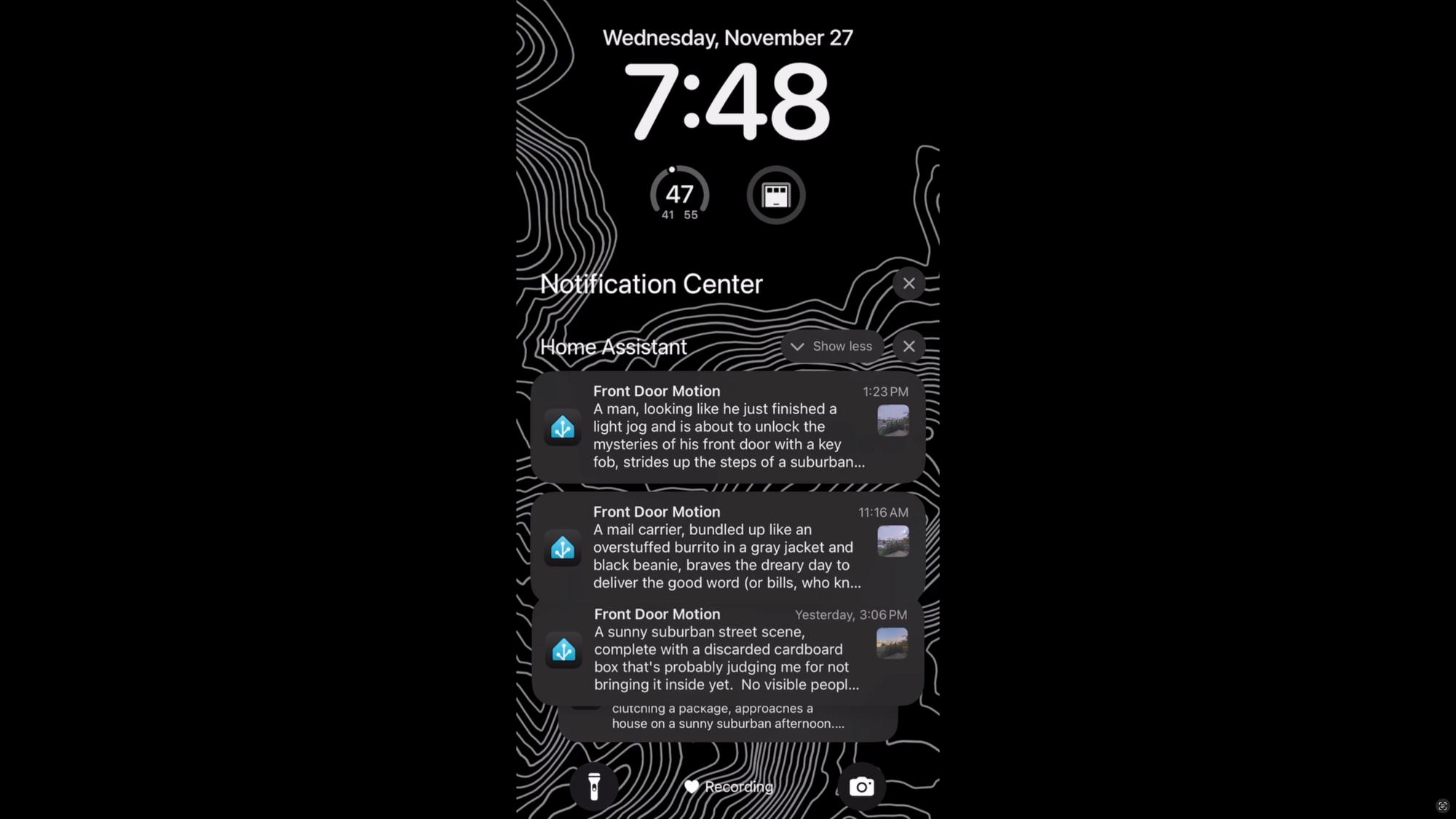

You can use it to analyze images, videos, live camera feeds, and Frigate events thanks to the vision capabilities of LLMs. This means it can describe the number and appearance of people appearing on a camera, identify license plate numbers on cars, and a ton more. After seeing this, you’ll realize that getting a notification saying something like “Front Door Motion” feels like a thing of the past. Instead, you can get a notification telling you, “A mail carrier delivers mail to the front door.”

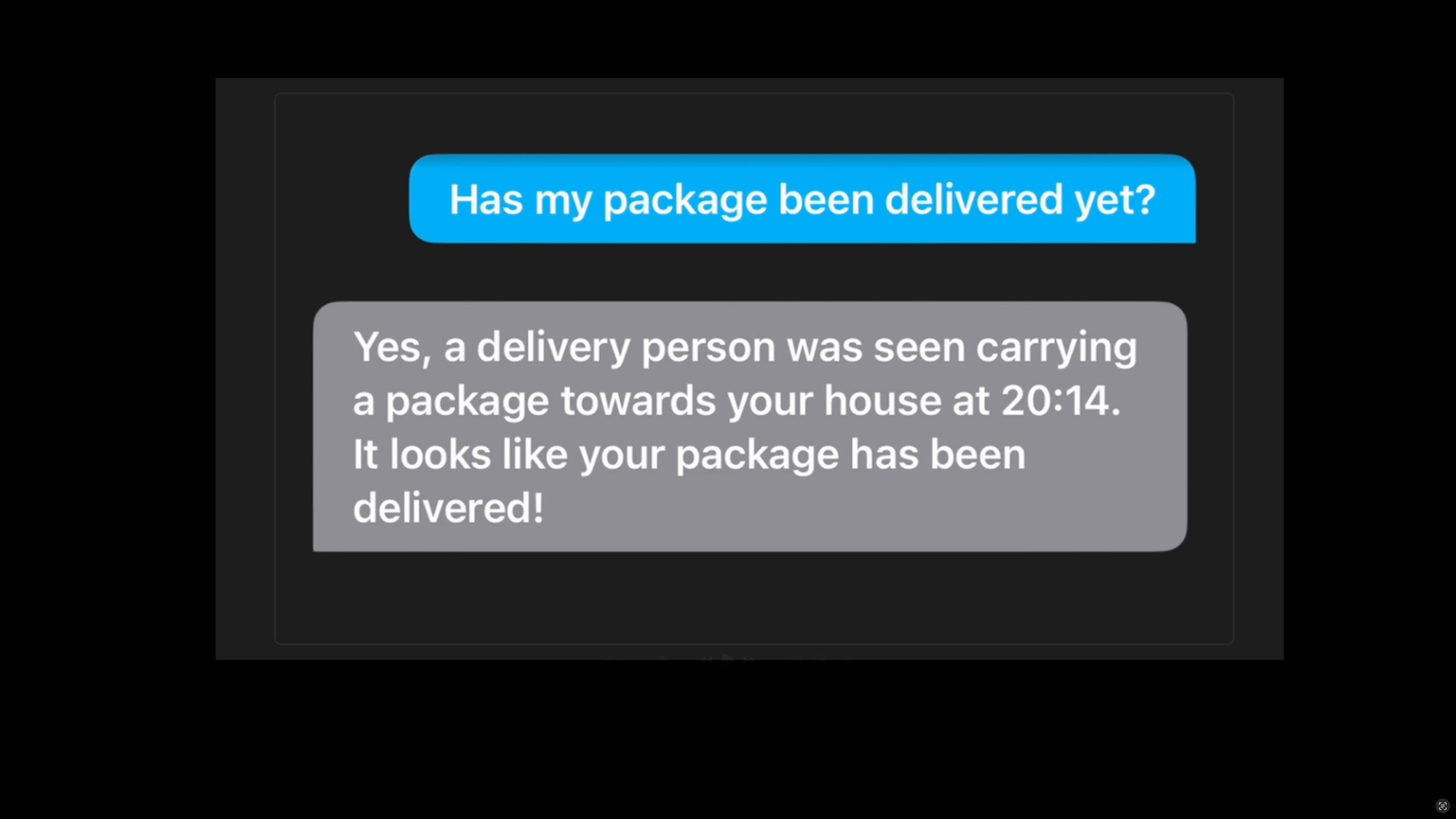

You can even tell the LLM to remember camera events so you can ask about them later. For example, you could ask your smart home, “Has my package been delivered yet?” or “Have you seen the dog (or cat) outside?”

LLM Vision is a HACS integration for Home Assistant

Setup

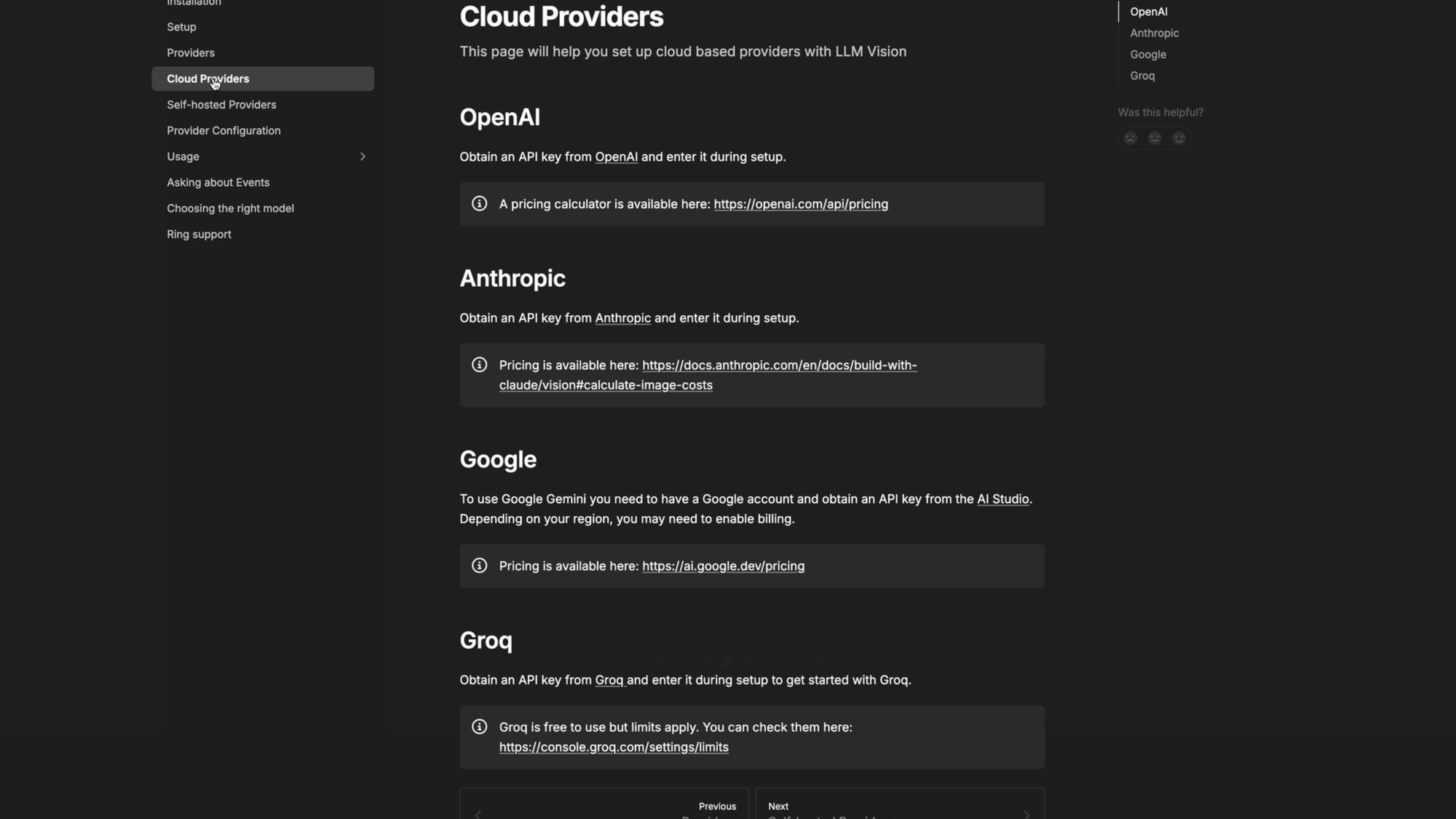

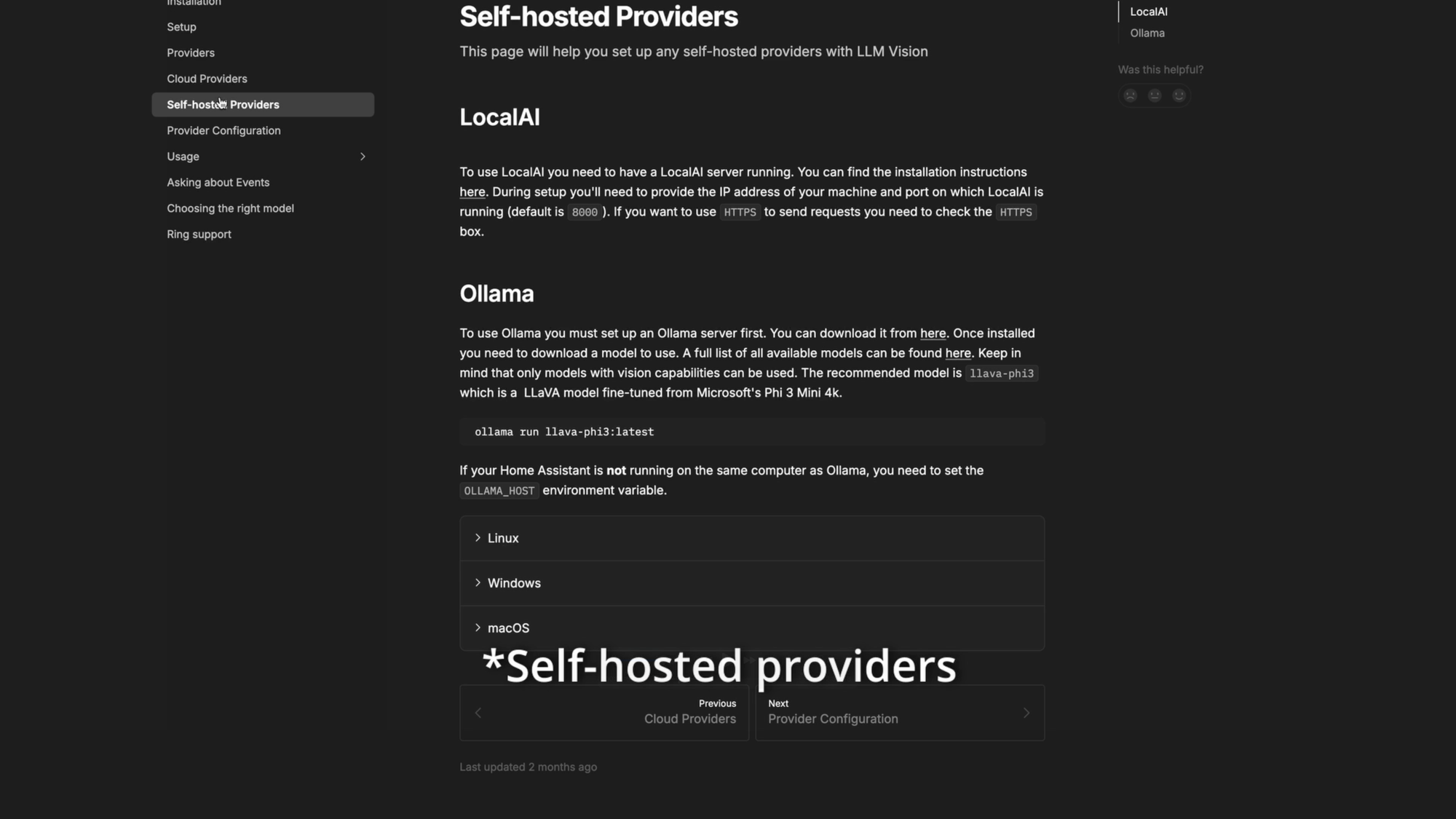

For the easiest setup, it works with cloud providers, like OpenAI, Anthropic, Google Gemini, and Groq. But you can also use it with local providers on your own hardware, like LocalAI and Ollama.

LLM Vision is compatible with OpenAI, Anthropic, Google, Groq, LocalAI, and Ollama

Adding LLM Vision

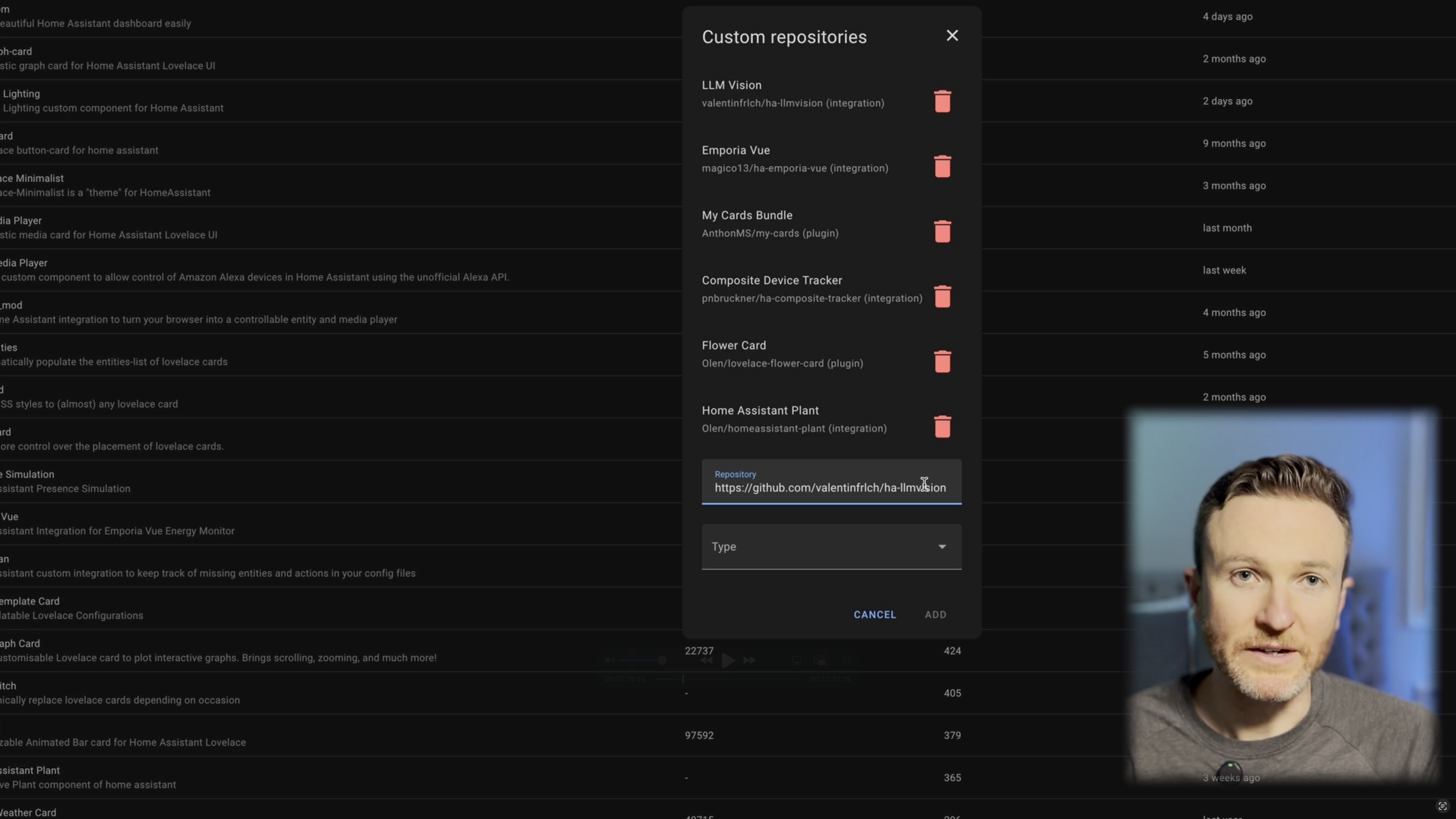

- To get started, visit the Home Assistant Community Store (HACS) in Home Assistant.

- Click the three dots in the upper right > select Custom repositories > paste in this GitHub repo > choose Integration for Type > Click Add.

- Open the LLM Vision integration that you just added in HACS > click Install > restart Home Assistant.

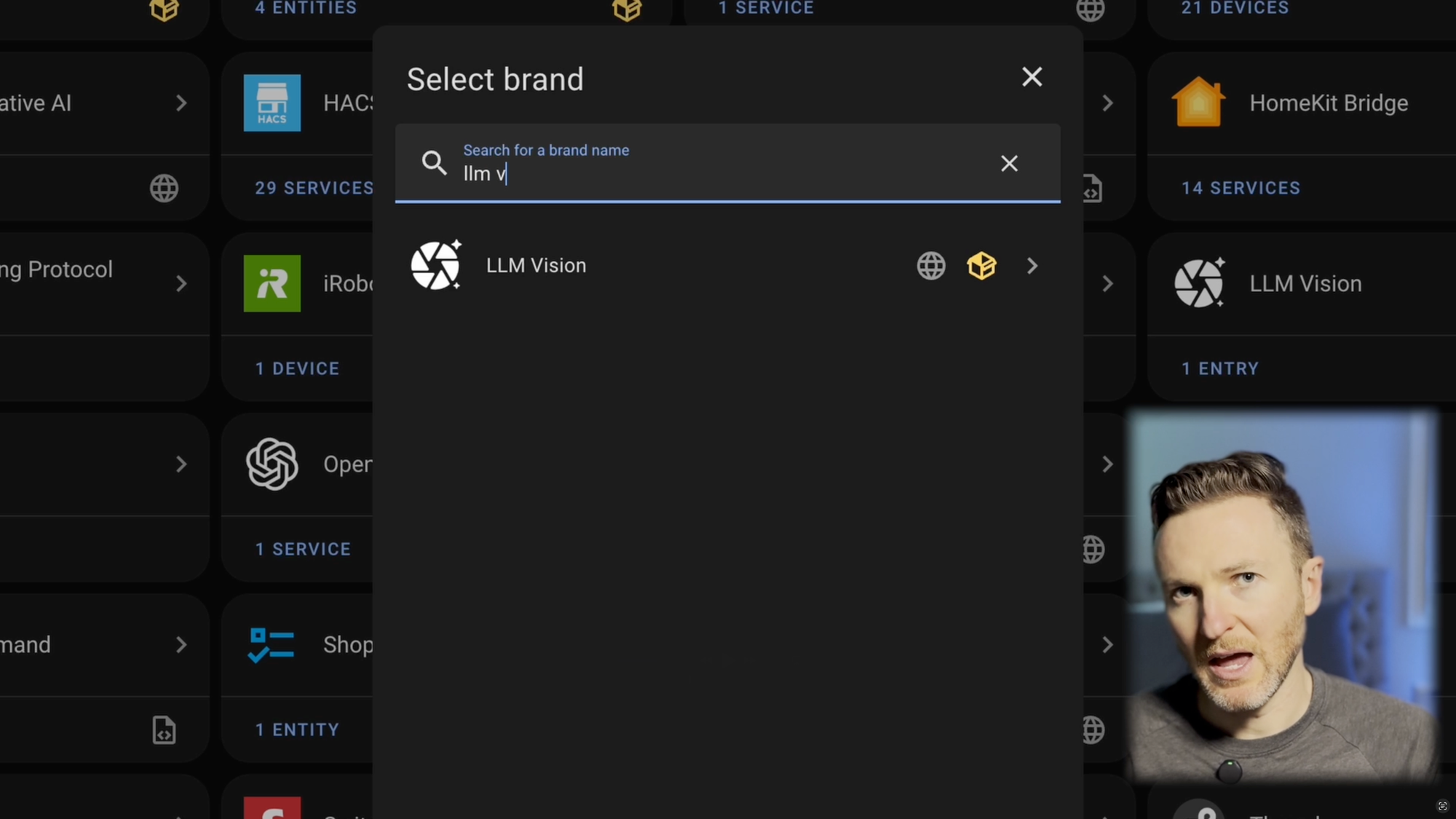

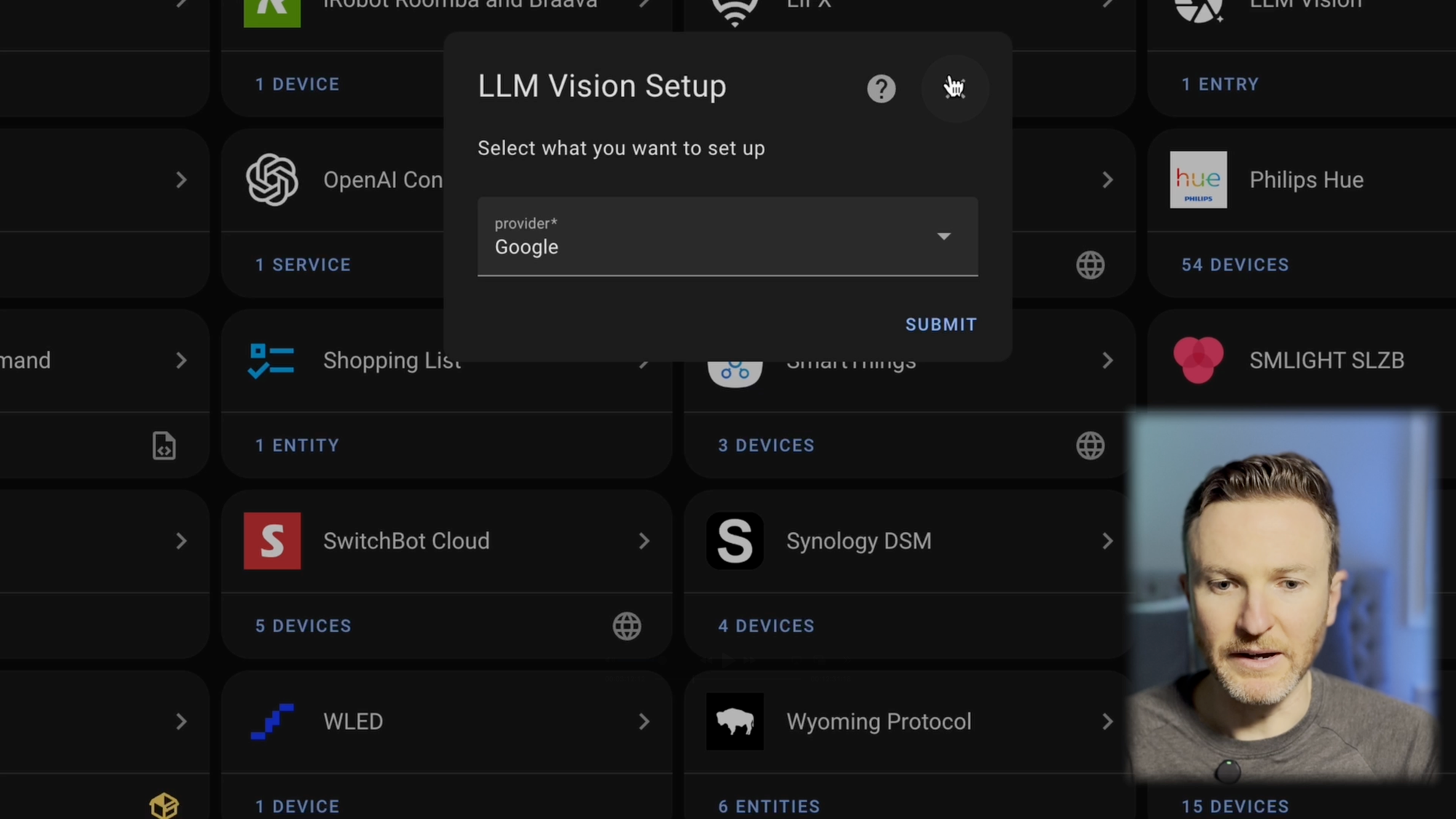

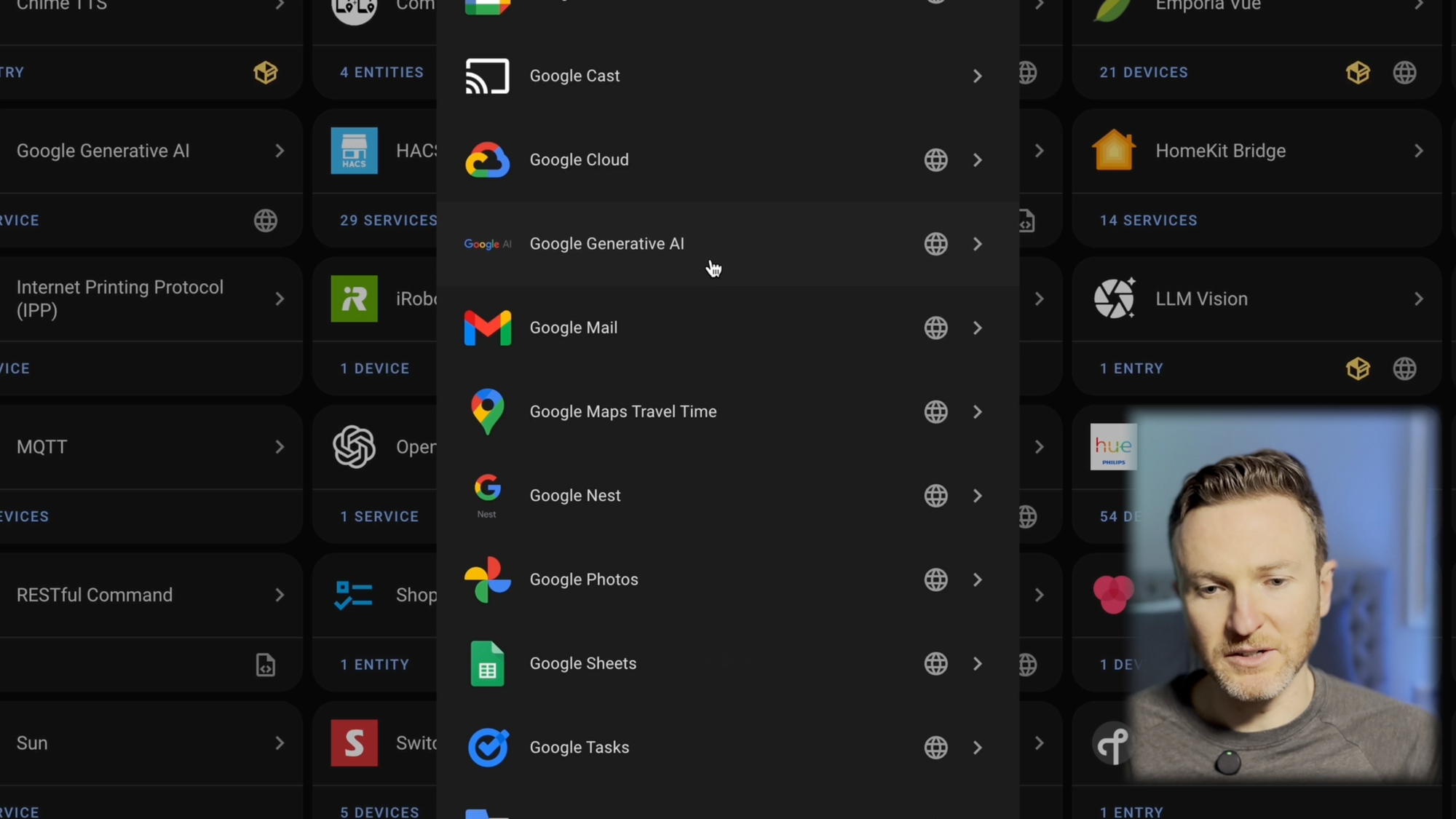

- Once Home Assistant restarts, go to Settings > Devices & services > Add integration > search for LLM Vision > open it > select your desired provider.

Add LLM Vision to Home Assistant from HACS, and select your preferred LLM provider

Adding an LLM

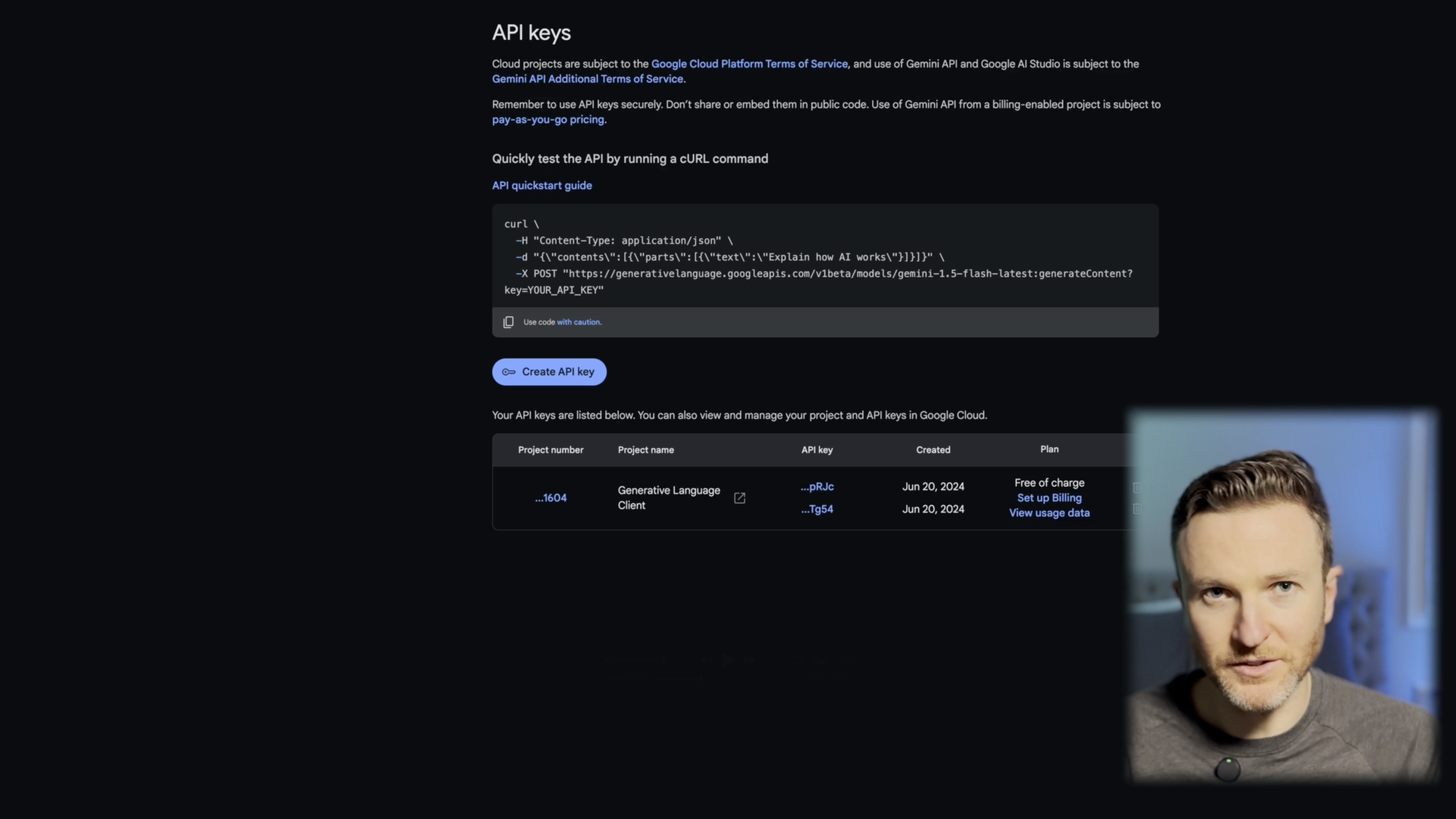

If you don’t already have an LLM added to Home Assistant, here’s how to add Google Gemini. The process is similar for ChatGPT.

- Visit the Google AI Studio and generate an API key.

- In Home Assistant, go to Settings > Devices & services > Add integration > search for Google > select Google Generative AI > paste the API key.

At this point, you should be ready to start testing out LLM Vision in Home Assistant.

Add your preferred LLM to Home Assistant by generating an API key

Testing out LLM Vision

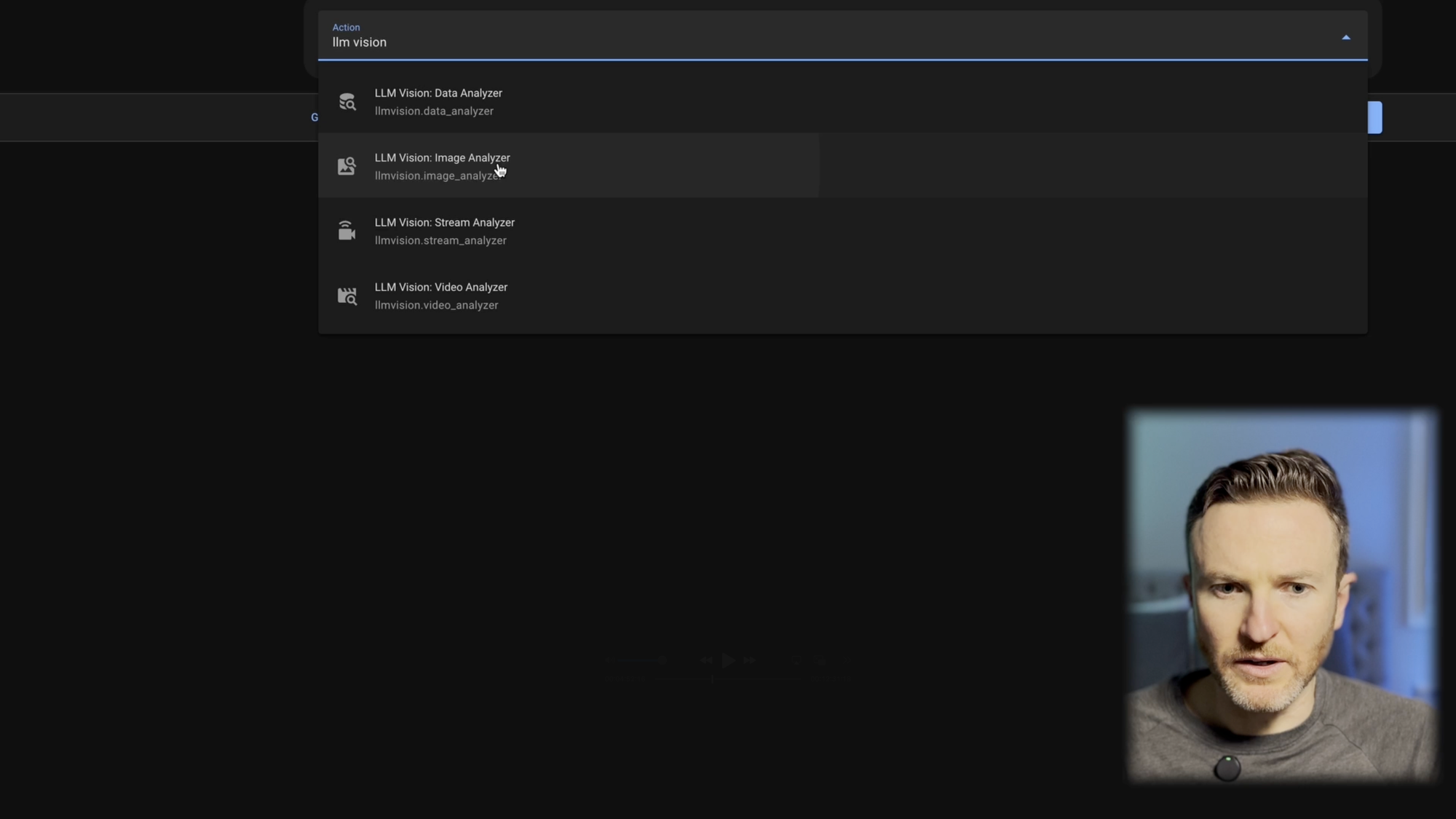

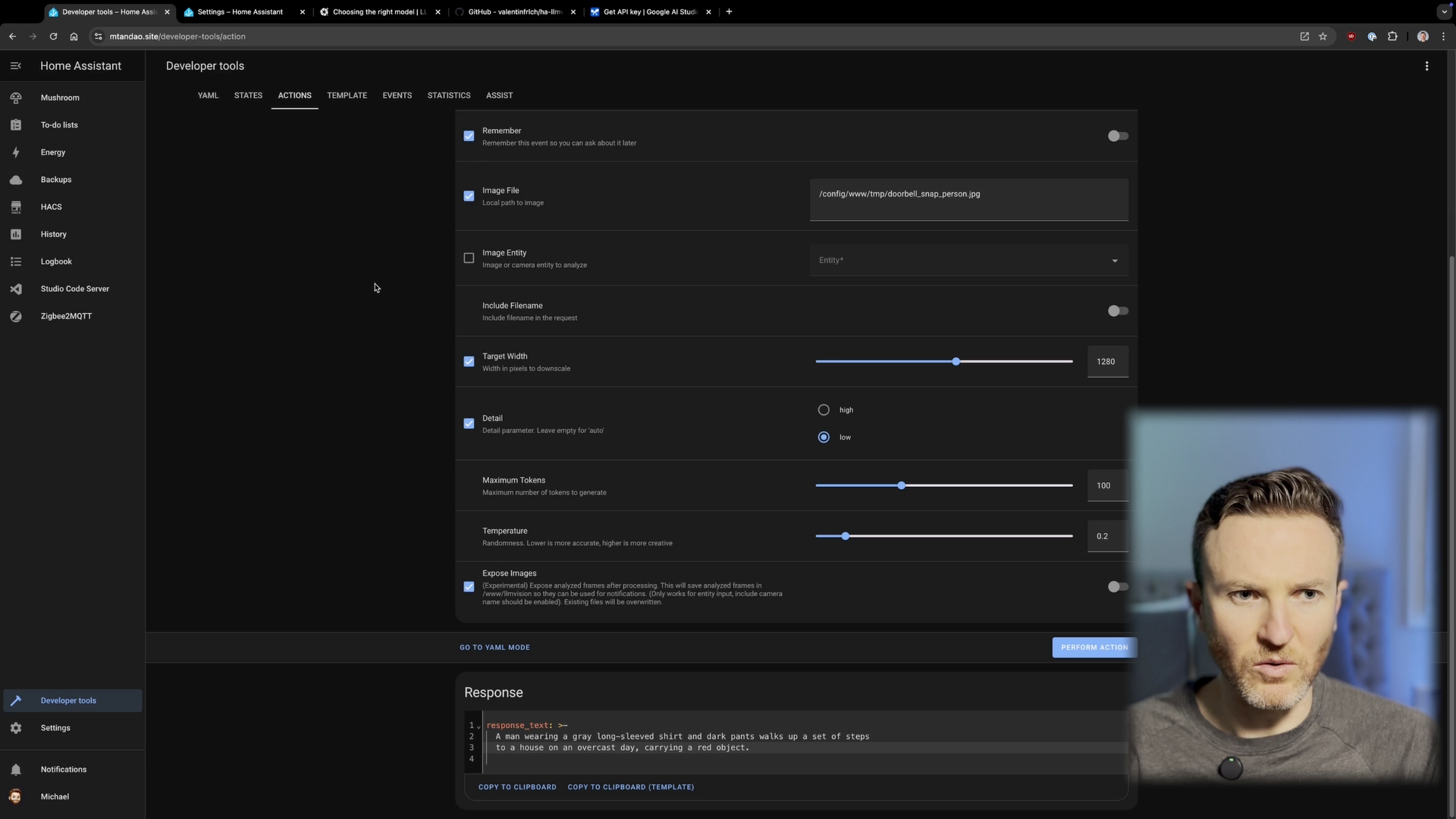

- To do this, visit Developer Tools > Actions > search for LLM Vision. You can select LLM Vision: Image Analyzer, for example.

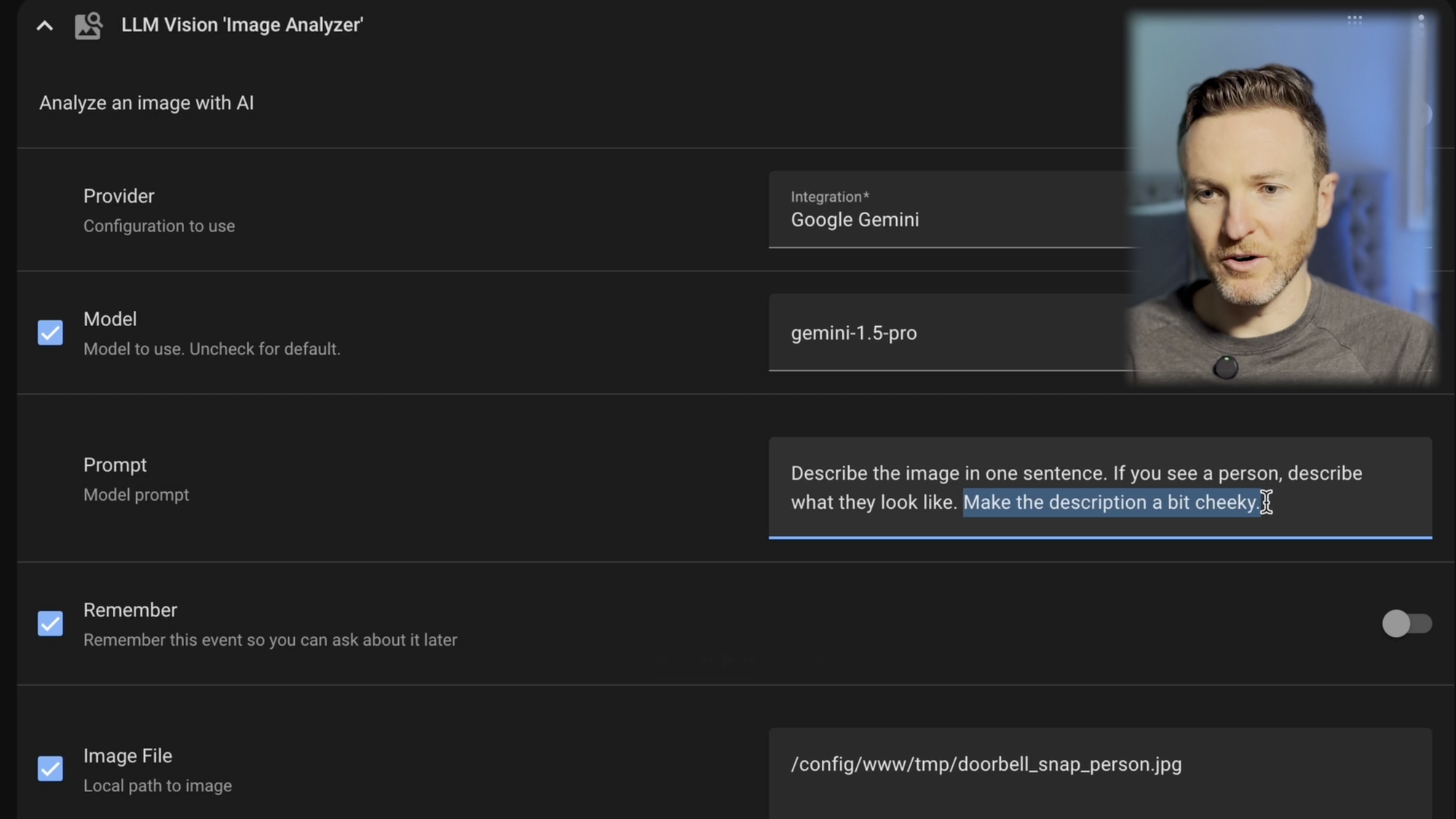

- For the Provider, select the LLM that you previously added, such as Google Gemini.

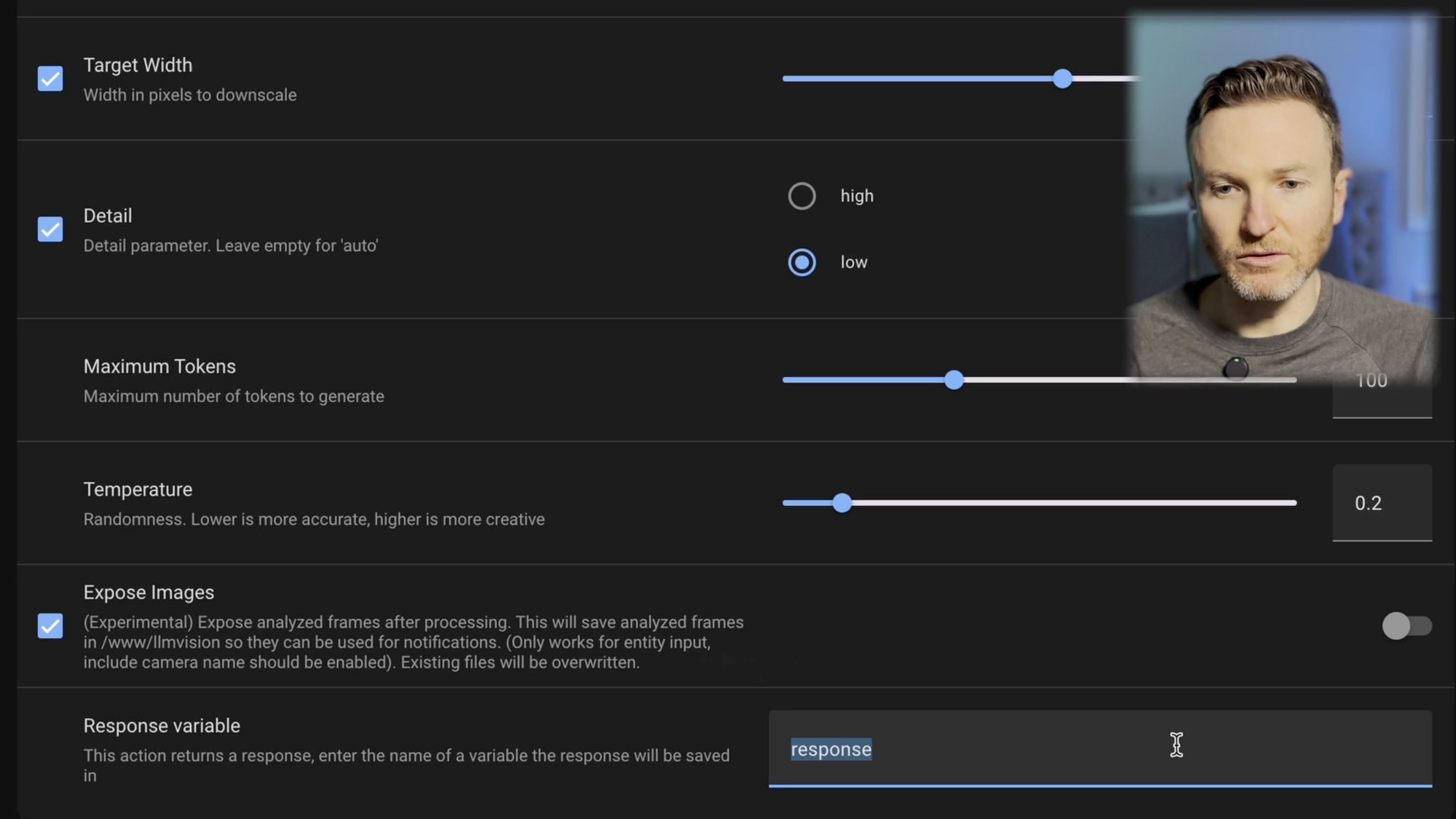

- You can leave Model blank, or add in your preferred model based on this provider list.

- For the Prompt, tell the LLM what you want it to do, such as “Describe the image in one sentence. If you see people, describe their appearance.”

- You can leave “Remember” checked if you want the option to refer back to this image analysis later, but it’s optional.

- Add the path to where your camera snapshot images are stored in Image File. This may look like: /config/www/tmp/YOUR_IMAGE_NAME.jpg

- If you are just analyzing an image and not a live stream, you can leave Image Entity blank.

- The other settings may be left alone.

- Click Perform Action to see a response from the LLM after it analyzes your image.
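
If you switch the action editor to YAML mode, the form filled in above corresponds to something like the following. Treat this as a sketch rather than output copied from the integration: the field names (`provider`, `message`, `image_file`, `remember`, `max_tokens`) reflect my understanding of LLM Vision's action schema and may differ between versions, and `YOUR_PROVIDER_ID` is a placeholder for the ID the UI fills in when you pick your provider.

```yaml
# Sketch of the Developer Tools > Actions form in YAML mode.
# Field names are assumptions; compare against what your installed version shows.
action: llmvision.image_analyzer
data:
  provider: YOUR_PROVIDER_ID  # placeholder: filled in by the UI when you select a provider
  message: >-
    Describe the image in one sentence.
    If you see people, describe their appearance.
  image_file: /config/www/tmp/YOUR_IMAGE_NAME.jpg
  remember: false  # set to true to refer back to this analysis later
  max_tokens: 100  # assumed cap on the length of the reply
```

The easiest way to get the exact YAML for your setup is to fill in the form in UI mode first and then toggle to YAML mode, which shows the real field names and provider ID.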

Testing out LLM Vision Image Analyzer in Developer Tools

Home Automation

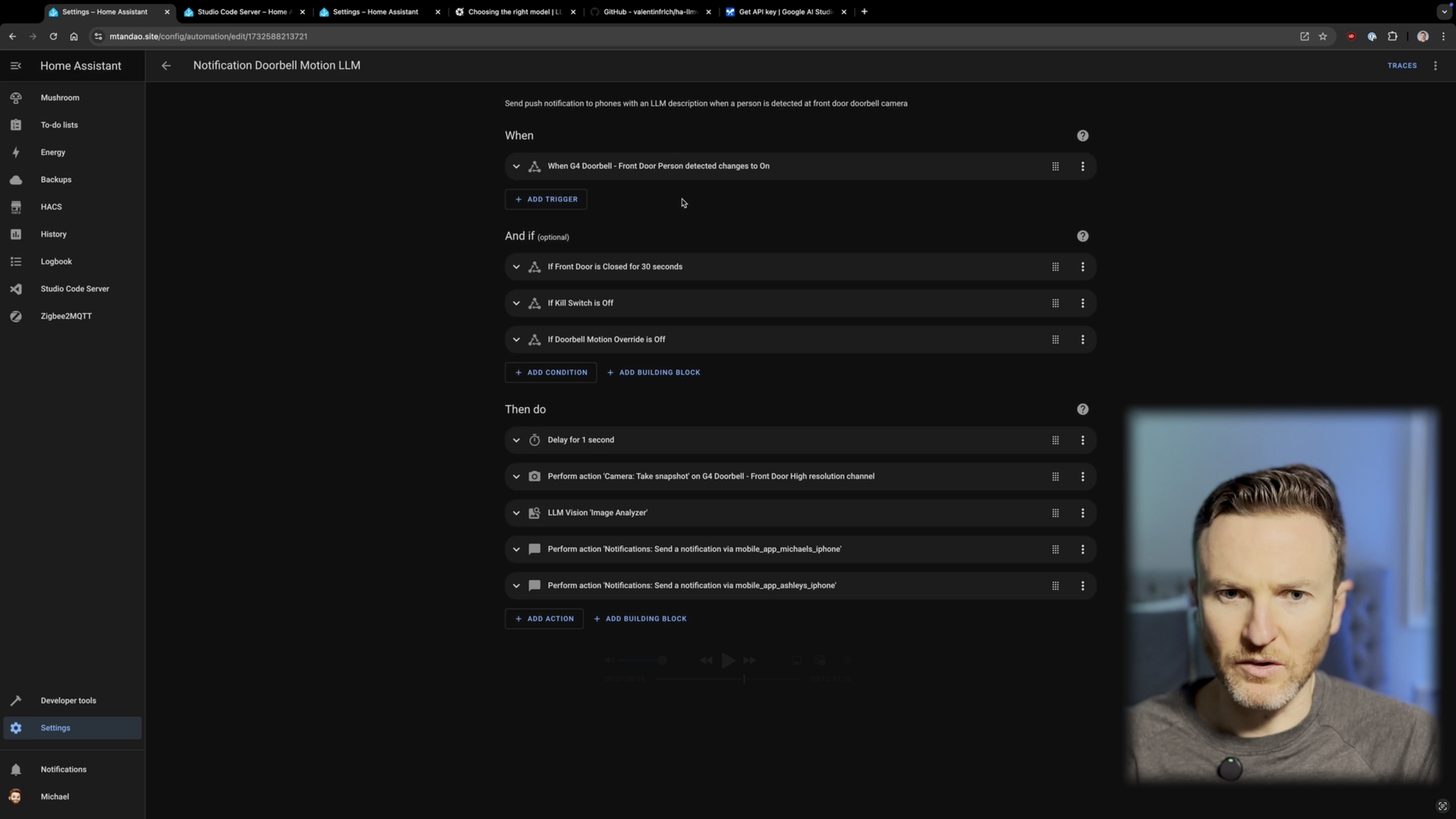

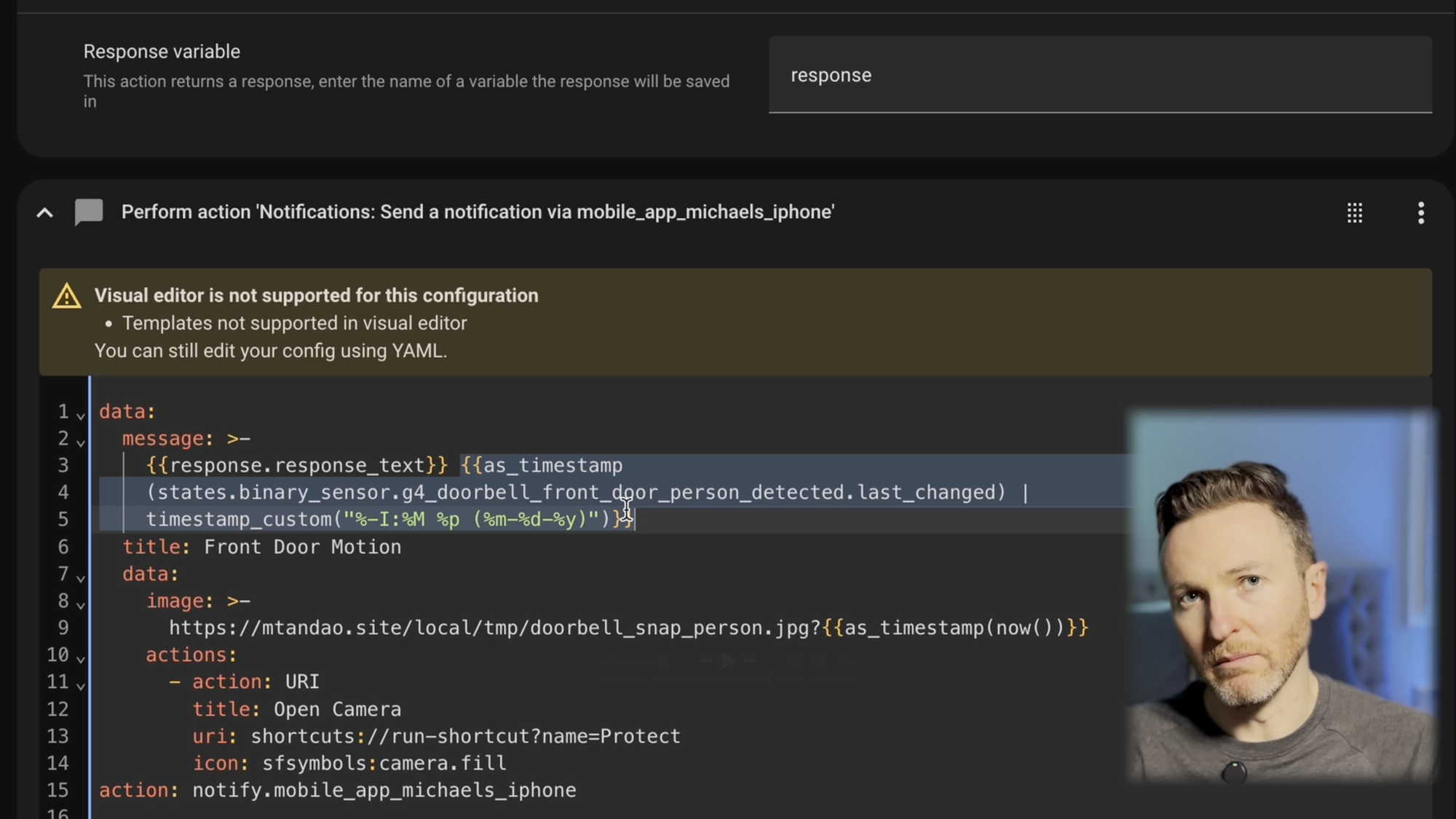

Below is an example of how I am using this in a home automation. This automation sends me and my wife a push notification on our phones when a person is detected at the video doorbell. LLM Vision improves upon such notifications by describing what is actually in the image. If you’d like to have some fun, you can add something to your Prompt such as, “Be a bit cheeky,” as I did.

To make it easier, you can grab the YAML code I am using here.
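
If you would rather build one from scratch, the general shape looks roughly like this. It is a generic sketch, not the exact YAML linked above. The entity IDs (`binary_sensor.doorbell_person_detected`, `camera.doorbell`), the notify target (`notify.mobile_app_my_phone`), the snapshot filename, and `YOUR_PROVIDER_ID` are all placeholders, and the LLM Vision field names and the `response_text` key are assumptions based on my understanding of the integration, so check them against your installed version.

```yaml
alias: Describe person at front door (sketch)
triggers:
  # Fires when the doorbell reports a person (hypothetical sensor name)
  - trigger: state
    entity_id: binary_sensor.doorbell_person_detected
    to: "on"
actions:
  # 1. Save a snapshot where LLM Vision can read it
  - action: camera.snapshot
    target:
      entity_id: camera.doorbell  # hypothetical camera entity
    data:
      filename: /config/www/tmp/doorbell.jpg
  # 2. Ask the LLM to describe the snapshot and keep the reply in a variable
  - action: llmvision.image_analyzer
    data:
      provider: YOUR_PROVIDER_ID  # placeholder
      message: >-
        Describe the person at the front door in one sentence.
        Be a bit cheeky.
      image_file: /config/www/tmp/doorbell.jpg
      max_tokens: 100  # assumed field name
    response_variable: llm_response
  # 3. Send the description as a push notification
  - action: notify.mobile_app_my_phone  # hypothetical notify target
    data:
      title: Front Door
      message: "{{ llm_response.response_text }}"  # assumed response key
mode: single
```

The plural `triggers:` and `actions:` keys assume a recent Home Assistant release. Older releases use `trigger:` with `platform: state`, and `service:` in place of `action:`.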

Creating a home automation to send a push notification to my phone when a person is detected at the front door, with an LLM-generated description of that person

Use Cases

But it’s not just about video doorbell events, or humor. You could also have it do other useful things, like:

- Tell you if the trash bins have been moved to the curb

- Recognize cars based on license plates

- Determine if a UPS driver already visited

- Get a summary of what is happening around your house

- Leave an alert on a smart home dashboard that you have a package outside waiting for pick-up
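
As a rough sketch of the first and last ideas combined, an automation could ask the LLM a yes/no question and store the answer in a helper that you show on a dashboard. Everything named here is hypothetical: the `camera.driveway` entity, the `input_boolean.trash_bins_at_curb` helper (which you would create yourself), the 7 PM schedule, and `YOUR_PROVIDER_ID`. The LLM Vision field names and the `response_text` key are assumptions, as is the idea that the model will reliably answer with a single word.

```yaml
alias: Check whether trash bins are at the curb (sketch)
triggers:
  # Check once each evening (arbitrary time)
  - trigger: time
    at: "19:00:00"
actions:
  # Ask a question with a constrained answer so it is easy to parse
  - action: llmvision.image_analyzer
    data:
      provider: YOUR_PROVIDER_ID  # placeholder
      message: "Are the trash bins at the curb? Answer with only YES or NO."
      image_entity:
        - camera.driveway  # hypothetical camera entity
      max_tokens: 20  # assumed field name
    response_variable: bins
  # Flip a dashboard helper based on the answer
  - if:
      - condition: template
        value_template: "{{ 'YES' in (bins.response_text | upper) }}"
    then:
      - action: input_boolean.turn_on
        target:
          entity_id: input_boolean.trash_bins_at_curb  # hypothetical helper
    else:
      - action: input_boolean.turn_off
        target:
          entity_id: input_boolean.trash_bins_at_curb
mode: single
```

Constraining the prompt to YES or NO keeps the template check simple, but LLM answers can vary, so it is worth testing the prompt in Developer Tools before relying on it.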

Final Thoughts

Before using this, having a person detected at the front door was a rather mundane thing. Now, I look forward to whatever hilarious description the LLM is going to come up with next. My wife and I are often cracking up at the descriptions. They’re not only useful, but a lot of fun. And I’m eager to see what other ways I can put this to use in my smart home, plus ideas that others share in the Home Assistant community.
